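Mission

- Implement CPU utilization handling with the AWS CLI

- Each person chooses which alarm to trigger and completes it

- Complete one S3 usage example

- Follow the S3 technical documentation to build it yourself and finish a data storage test

- Read one AWS article or manual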

실습 1

CPU 사용량 처리를 AWS CLI 기반으로 개발

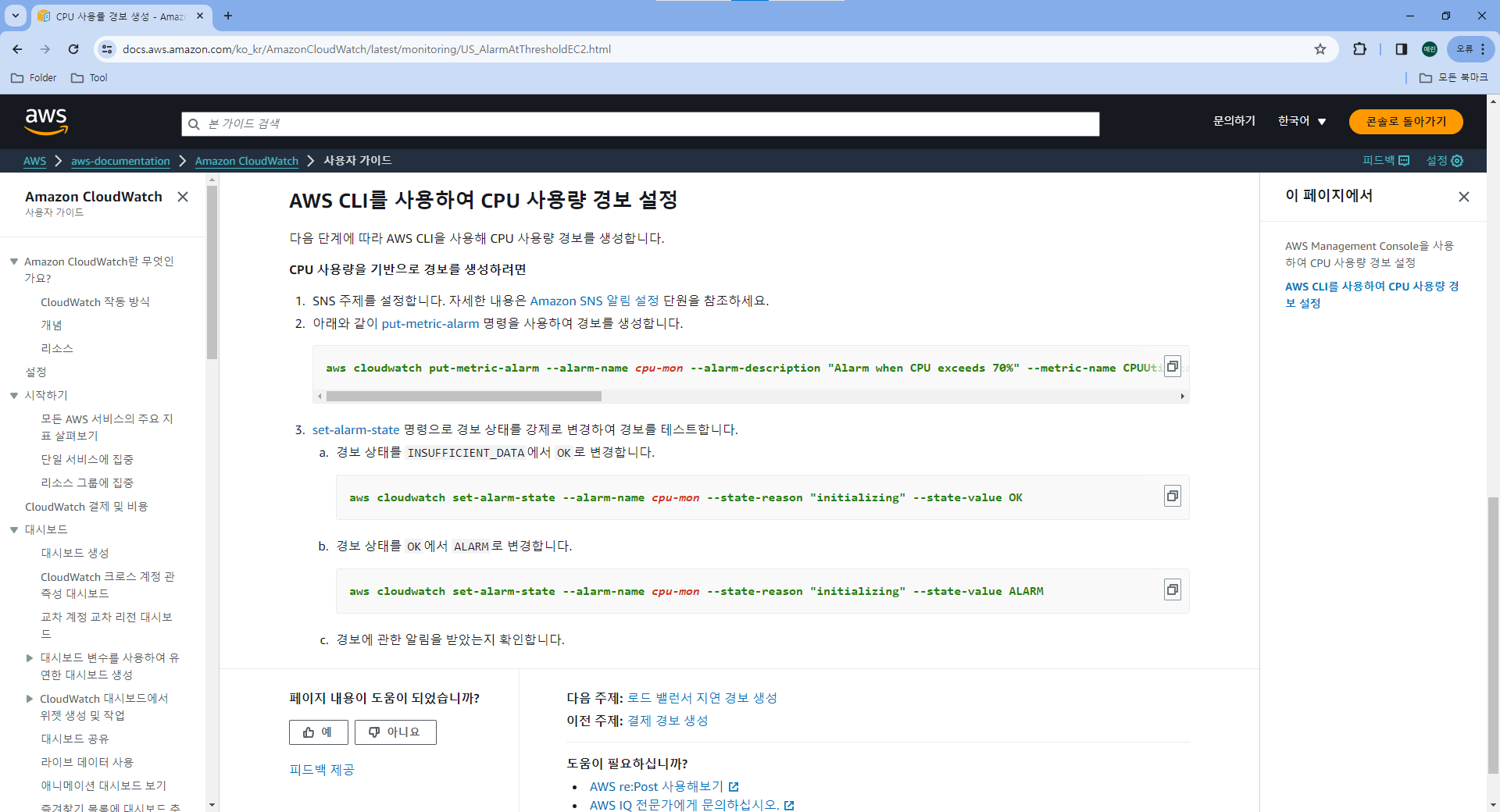

AWS CLI를 사용하여 CPU 사용량 경보 설정

공식 문서를 참고하여 실습했다.

https://docs.aws.amazon.com/ko_kr/AmazonCloudWatch/latest/monitoring/US_AlarmAtThresholdEC2.html

아래와 같이 put-metric-alarm 명령을 사용하여 경보를 생성한다.

aws cloudwatch put-metric-alarm --alarm-name cpu-mon --alarm-description "Alarm when CPU exceeds 70%" --metric-name CPUUtilization --namespace AWS/EC2 --statistic Average --period 300 --threshold 70 --comparison-operator GreaterThanThreshold --dimensions Name=InstanceId,Value=i-07df4b78fad368fcb --evaluation-periods 2 --alarm-actions arn:aws:sns:ap-northeast-2:646580111040:my-topic --unit Percent

This is the command given in the official documentation.
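To make the flags concrete: `--statistic Average` with `--period 300` yields one averaged datapoint per 5 minutes, and `--evaluation-periods 2` with `--threshold 70 --comparison-operator GreaterThanThreshold` means the alarm fires only when the two most recent datapoints are both above 70. The Java sketch below mimics that rule on made-up datapoints. The class and method names are hypothetical, and it is a simplification: real CloudWatch also has configurable missing-data handling and a `--datapoints-to-alarm` option that this ignores.

```java
import java.util.List;

final class AlarmRuleSketch {
    enum State { INSUFFICIENT_DATA, OK, ALARM }

    // Hypothetical helper: ALARM only if every one of the last `evaluationPeriods`
    // period averages is strictly greater than `threshold`.
    static State evaluate(List<Double> periodAverages, int evaluationPeriods, double threshold) {
        if (periodAverages.size() < evaluationPeriods) {
            // Simplification of how CloudWatch treats missing datapoints.
            return State.INSUFFICIENT_DATA;
        }
        List<Double> recent = periodAverages.subList(
                periodAverages.size() - evaluationPeriods, periodAverages.size());
        boolean allBreaching = recent.stream().allMatch(avg -> avg > threshold);
        return allBreaching ? State.ALARM : State.OK;
    }

    public static void main(String[] args) {
        // Made-up 5-minute CPU averages in percent, oldest first.
        System.out.println(evaluate(List.of(35.0, 82.0), 2, 70.0));       // one breach is not enough
        System.out.println(evaluate(List.of(35.0, 82.0, 91.0), 2, 70.0)); // two breaches in a row
        System.out.println(evaluate(List.of(70.0, 70.0), 2, 70.0));       // exactly 70 is not "greater than"
    }
}
```

Under this rule a short CPU spike that affects only one 5-minute average does not trigger the alarm.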

aws cloudwatch put-metric-alarm --alarm-name {my alarm name} --alarm-description "Alarm when CPU exceeds 70%" --metric-name CPUUtilization --namespace AWS/EC2 --statistic Average --period 300 --threshold 70 --comparison-operator GreaterThanThreshold --dimensions Name=InstanceId,Value={AWS EC2 instance id} --evaluation-periods 2 --alarm-actions arn:aws:sns:{my AWS region}:{my AWS id}:my-topic --unit Percent

Set the highlighted parts (shown in braces here) to match your own environment.

my alarm name: any name you like.

AWS EC2 instance id: found in the console under EC2 > Instances > Instance ID.

my AWS region: shown in the region tab at the top right of the console. Korea (Seoul) is ap-northeast-2.

my AWS id: the account ID shown in the user menu at the top right of the console.
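As a small illustration of how the placeholders fit together, the sketch below assembles the same command as an argument list in Java. The class, the method names, and the sample identifiers are hypothetical placeholders, not values from this exercise; the flags are the ones in the command above.

```java
import java.util.List;

final class PutMetricAlarmCommand {
    // SNS topic ARNs have the form arn:aws:sns:<region>:<account-id>:<topic-name>.
    static String snsTopicArn(String region, String accountId, String topicName) {
        return String.format("arn:aws:sns:%s:%s:%s", region, accountId, topicName);
    }

    // Builds the argument list for `aws cloudwatch put-metric-alarm`.
    static List<String> build(String alarmName, String instanceId, String topicArn) {
        return List.of(
            "aws", "cloudwatch", "put-metric-alarm",
            "--alarm-name", alarmName,
            "--alarm-description", "Alarm when CPU exceeds 70%",
            "--metric-name", "CPUUtilization",
            "--namespace", "AWS/EC2",
            "--statistic", "Average",
            "--period", "300",
            "--threshold", "70",
            "--comparison-operator", "GreaterThanThreshold",
            "--dimensions", "Name=InstanceId,Value=" + instanceId,
            "--evaluation-periods", "2",
            "--alarm-actions", topicArn,
            "--unit", "Percent");
    }

    public static void main(String[] args) {
        // Placeholder values only; substitute your own region, account ID, and instance ID.
        String arn = snsTopicArn("ap-northeast-2", "123456789012", "my-topic");
        List<String> command = build("cpu-mon", "i-0123456789abcdef0", arn);
        // Printed for inspection only; the description is not shell-quoted here.
        System.out.println(String.join(" ", command));
        // To actually run it (requires the AWS CLI and configured credentials):
        // new ProcessBuilder(command).inheritIO().start().waitFor();
    }
}
```

Passing the arguments as a list to `ProcessBuilder` avoids shell quoting problems with the description string.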

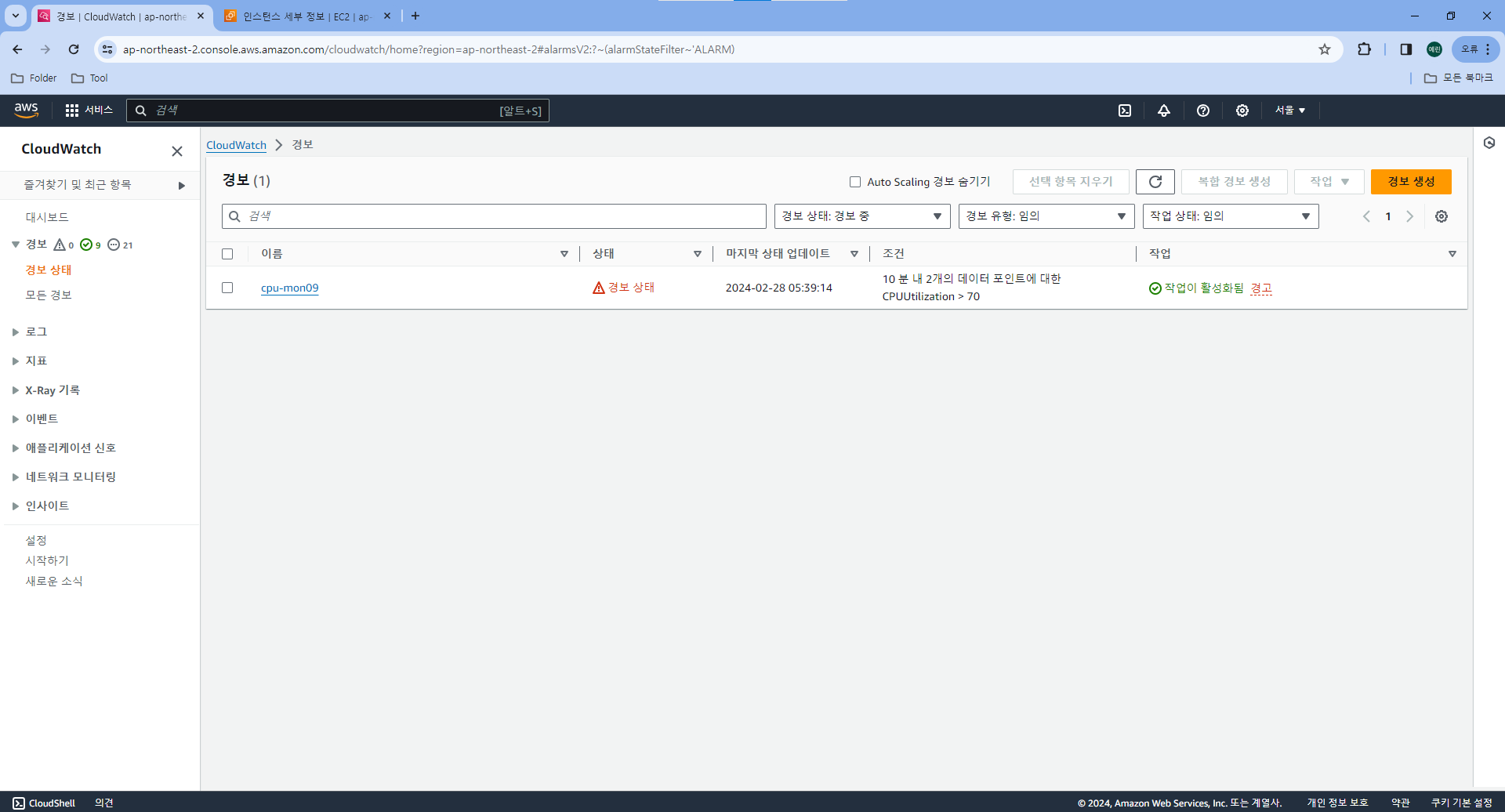

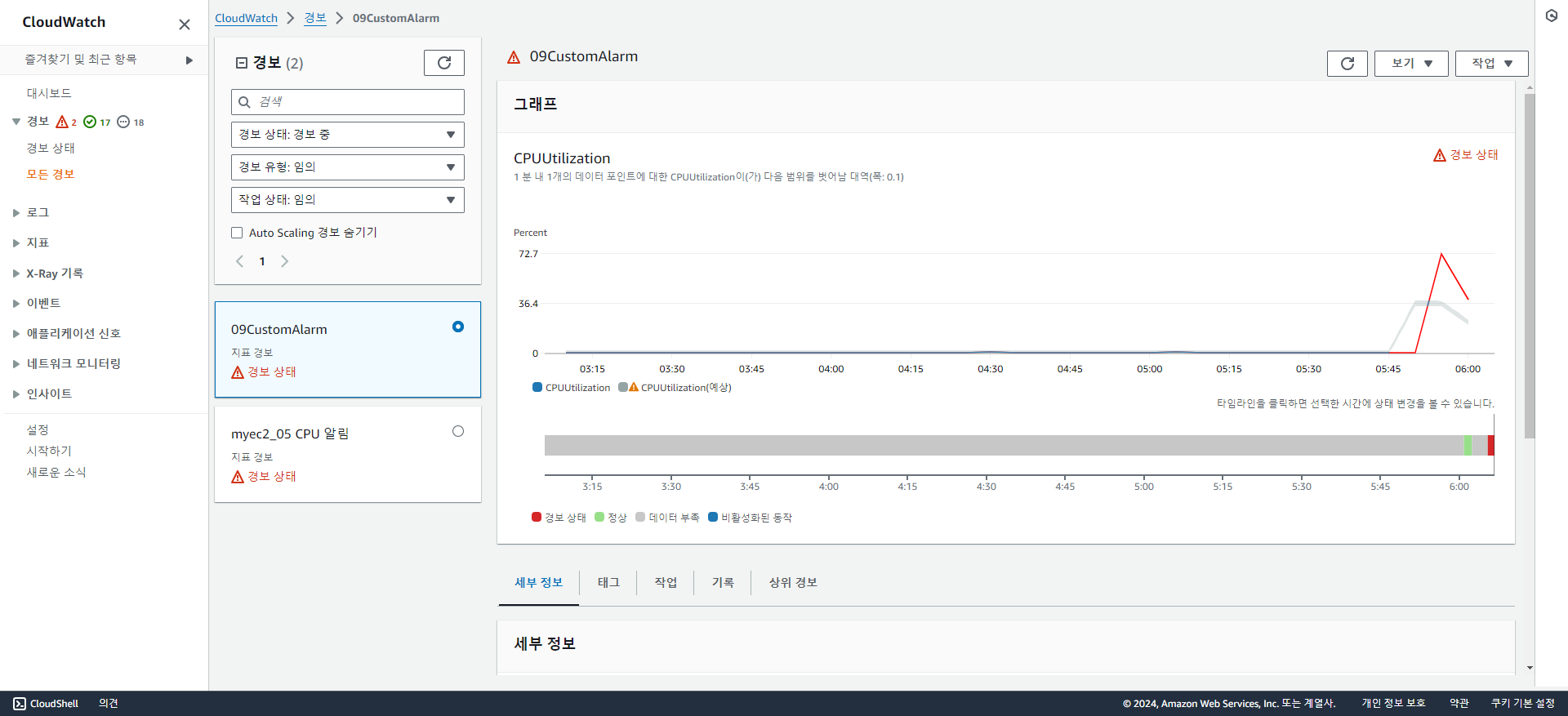

Testing the alarm

Change the alarm state from INSUFFICIENT_DATA to OK.

Then change the alarm state from OK to ALARM.

Admin@1-28 MINGW64 ~

$ aws cloudwatch set-alarm-state --alarm-name cpu-mon09 --state-reason "initializing" --state-value OK

Admin@1-28 MINGW64 ~

$ aws cloudwatch set-alarm-state --alarm-name cpu-mon09 --state-reason "initializing" --state-value ALARM

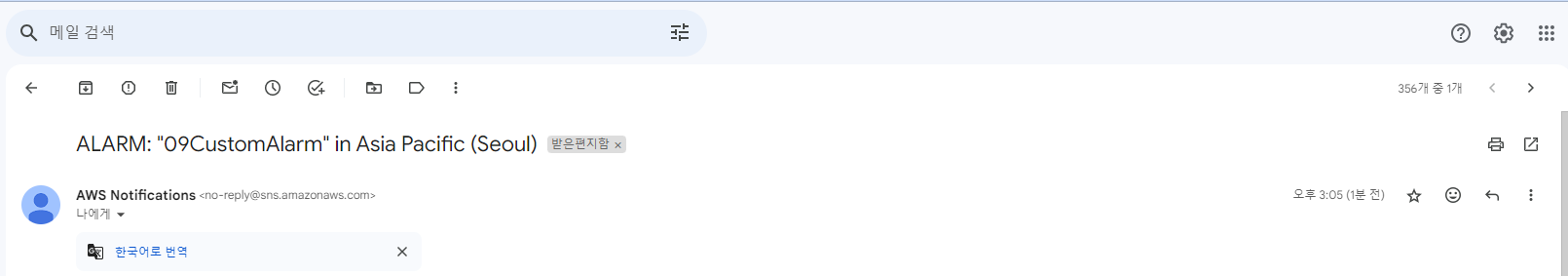

Check that you received the alarm notification.
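The reason this test produces a notification is that the action given in `--alarm-actions` runs when the alarm transitions into the ALARM state from another state. The sketch below models only that transition rule in plain Java; the class is hypothetical and the "notification" is just a list entry standing in for the SNS message.

```java
import java.util.ArrayList;
import java.util.List;

final class AlarmStateSketch {
    enum State { INSUFFICIENT_DATA, OK, ALARM }

    private State current = State.INSUFFICIENT_DATA; // state of a newly created alarm
    private final List<String> notifications = new ArrayList<>();

    // Mirrors `set-alarm-state --state-value <next> --state-reason <reason>`.
    void setState(State next, String reason) {
        boolean enteringAlarm = next == State.ALARM && current != State.ALARM;
        current = next;
        if (enteringAlarm) {
            // Stand-in for the SNS publish configured through --alarm-actions.
            notifications.add("ALARM: " + reason);
        }
    }

    State current() { return current; }

    List<String> notifications() { return notifications; }

    public static void main(String[] args) {
        AlarmStateSketch alarm = new AlarmStateSketch();
        alarm.setState(State.OK, "initializing");    // INSUFFICIENT_DATA -> OK, no notification
        alarm.setState(State.ALARM, "initializing"); // OK -> ALARM, one notification
        System.out.println(alarm.current() + " " + alarm.notifications());
    }
}
```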

실습 2

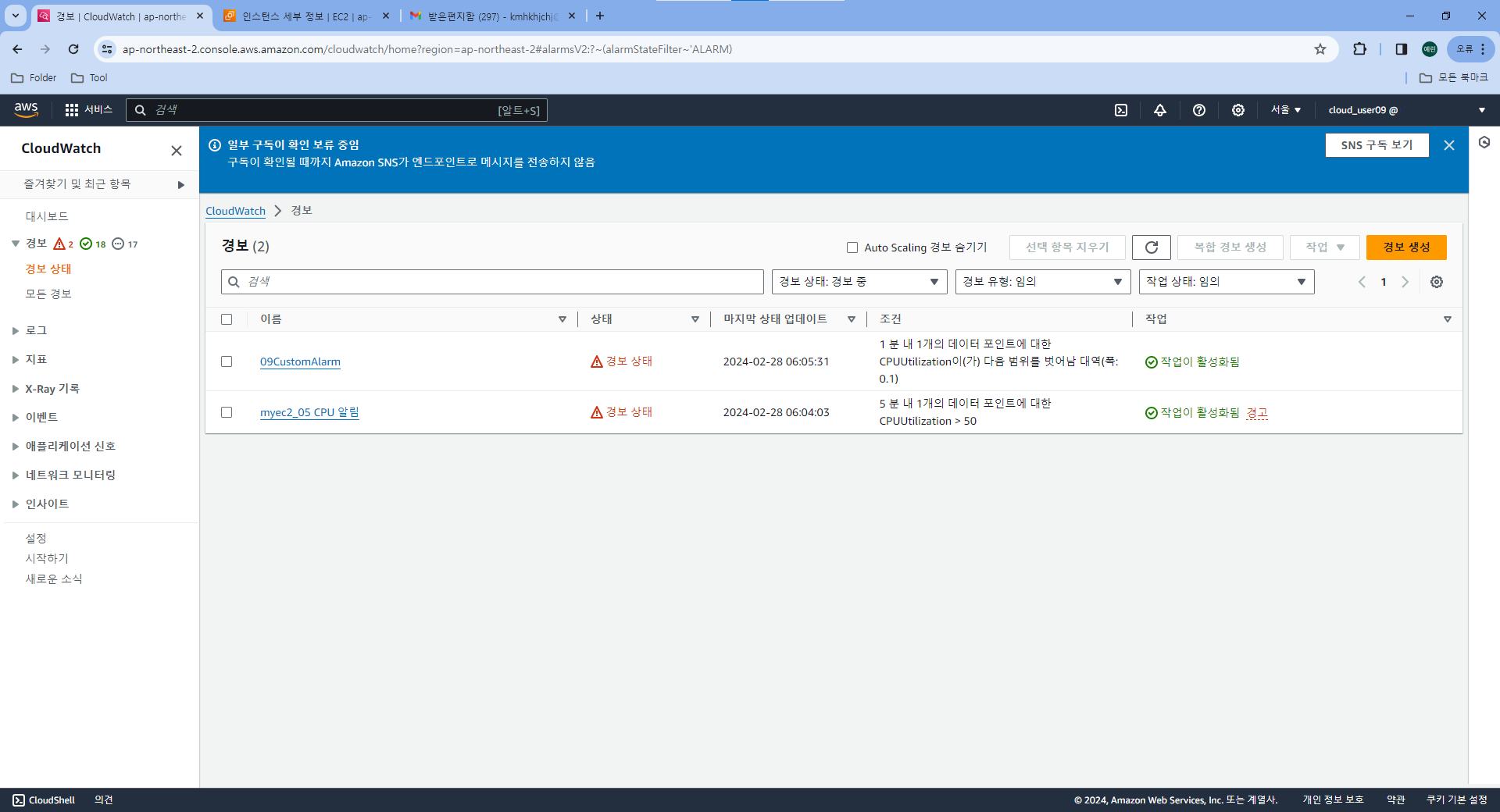

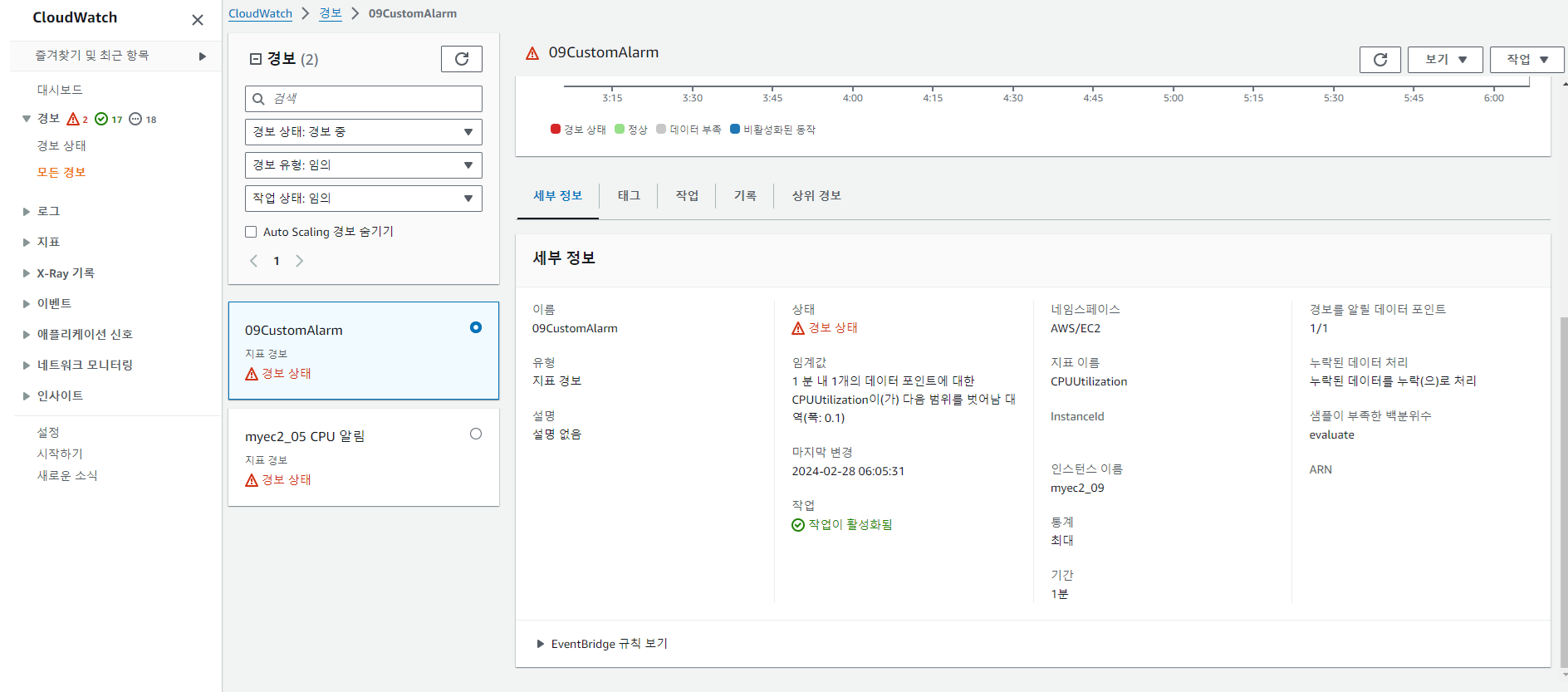

어떤 경보를 발생시킬 것인지 선택 후 완성

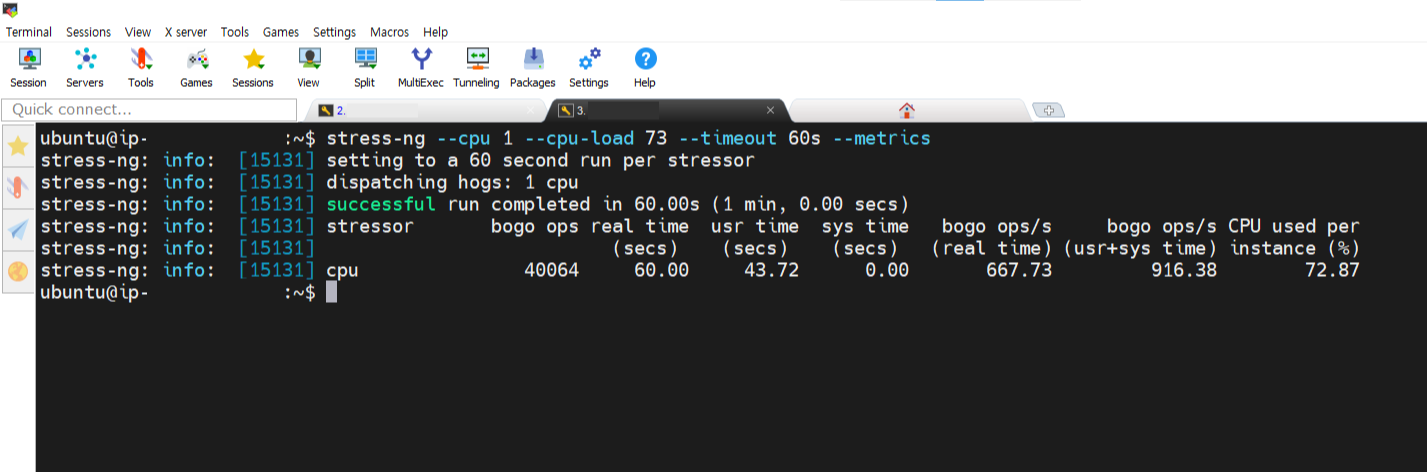

개인 커스텀 경보 발생시키기

stress-ng --cpu 1 --cpu-load 73 --timeout 60s --metrics
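When picking the load and duration, it helps to estimate the average the alarm will actually see. The sketch below is rough arithmetic only, and it rests on assumptions that are not measurements from this exercise: the instance is otherwise idle, a load held for part of the alarm period is diluted across the whole period, and one stressed worker is diluted across all vCPUs. The class name and the vCPU counts are hypothetical.

```java
final class LoadEstimate {
    // Rough estimate of the period-average CPU percentage an alarm would see.
    static double expectedAverage(double loadPercent, int workers, int vcpus,
                                  int loadSeconds, int periodSeconds) {
        double busyFraction = Math.min(1.0, (double) loadSeconds / periodSeconds);
        double cpuShare = Math.min(1.0, (double) workers / vcpus);
        return loadPercent * busyFraction * cpuShare;
    }

    public static void main(String[] args) {
        // stress-ng --cpu 1 --cpu-load 73 --timeout 60s on a hypothetical 1-vCPU instance
        System.out.printf("60 s period:  %.1f%%%n", expectedAverage(73, 1, 1, 60, 60));
        System.out.printf("300 s period: %.1f%%%n", expectedAverage(73, 1, 1, 60, 300));
        // Same command on a hypothetical 2-vCPU instance
        System.out.printf("2 vCPUs:      %.1f%%%n", expectedAverage(73, 1, 2, 60, 60));
    }
}
```

Under these assumptions a 60-second run stays above a 70% threshold only when the alarm period is about 60 seconds on a single vCPU. With a 300-second period like the one in Exercise 1, a longer `--timeout` covering the evaluated periods would be needed.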

실습 3

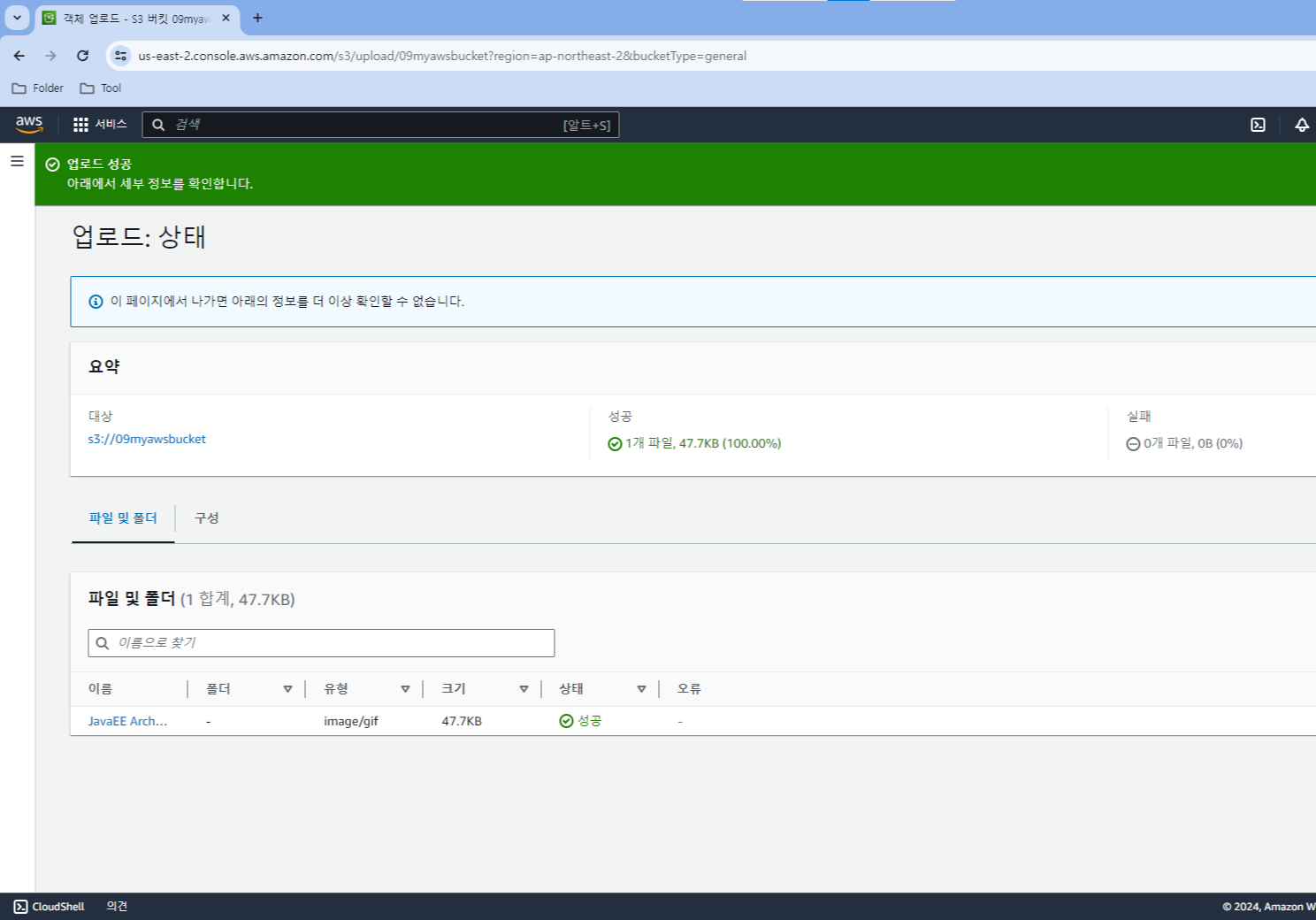

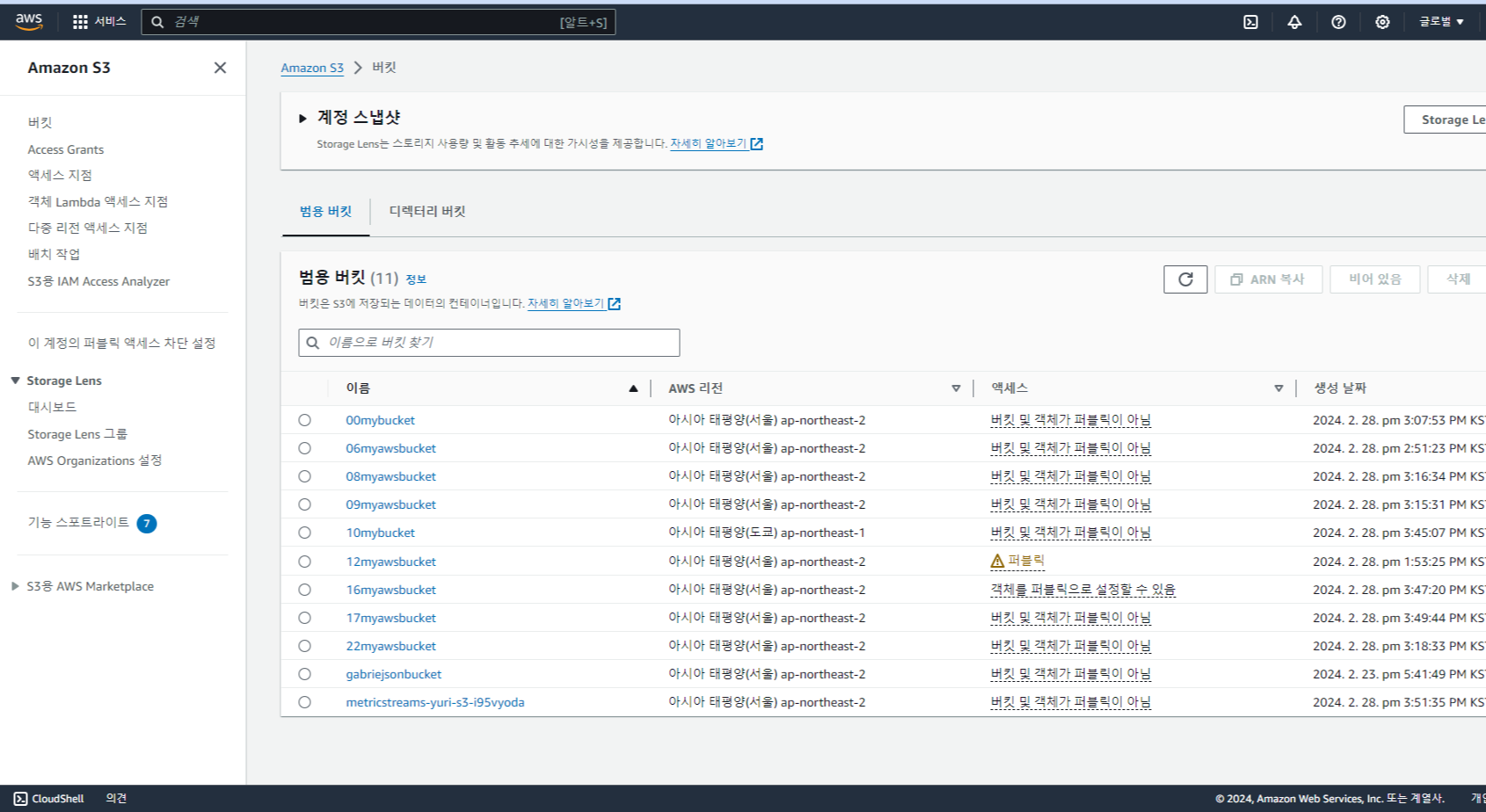

S3 기술 문서 보고 직접 구축 및 데이터 저장 test 완료

이렇게 직접 S3 버킷에 파일을 업로드할 수 있다.

https://guide.ncloud-docs.com/docs/storage-storage-8-1


Following the official documentation above, I tried out the Java SDK for the S3 API.
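The BucketService listing below keeps `accessKey` and `secretKey` as string literals, masked here. One way to avoid committing real keys is to read them from the environment instead. The sketch below uses only the JDK; the `CredentialsFromEnv` class and its `from` helper are hypothetical, while `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` are the variable names the AWS CLI and SDKs conventionally read.

```java
import java.util.Map;

final class CredentialsFromEnv {
    final String accessKey;
    final String secretKey;

    private CredentialsFromEnv(String accessKey, String secretKey) {
        this.accessKey = accessKey;
        this.secretKey = secretKey;
    }

    // Takes the environment as a map so it can be exercised without real variables.
    static CredentialsFromEnv from(Map<String, String> env) {
        String access = env.get("AWS_ACCESS_KEY_ID");
        String secret = env.get("AWS_SECRET_ACCESS_KEY");
        if (access == null || access.isBlank() || secret == null || secret.isBlank()) {
            throw new IllegalStateException(
                "Set AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY before running");
        }
        return new CredentialsFromEnv(access, secret);
    }

    public static void main(String[] args) {
        try {
            CredentialsFromEnv creds = from(System.getenv());
            // Print only a short prefix so the full key never reaches the logs.
            int shown = Math.min(4, creds.accessKey.length());
            System.out.println("Loaded access key starting with "
                + creds.accessKey.substring(0, shown));
        } catch (IllegalStateException e) {
            System.err.println(e.getMessage());
        }
    }
}
```

The two fields could then be passed to `new BasicAWSCredentials(accessKey, secretKey)` in place of the literals.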

🛒 BucketService

package edu.fisa.lab;

import java.io.BufferedOutputStream;

import java.io.ByteArrayInputStream;

import java.io.File;

import java.io.FileOutputStream;

import java.io.IOException;

import java.io.OutputStream;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

import com.amazonaws.SdkClientException;

import com.amazonaws.auth.AWSStaticCredentialsProvider;

import com.amazonaws.auth.BasicAWSCredentials;

import com.amazonaws.client.builder.AwsClientBuilder;

import com.amazonaws.services.s3.AmazonS3;

import com.amazonaws.services.s3.AmazonS3ClientBuilder;

import com.amazonaws.services.s3.model.AbortMultipartUploadRequest;

import com.amazonaws.services.s3.model.AmazonS3Exception;

import com.amazonaws.services.s3.model.Bucket;

import com.amazonaws.services.s3.model.CompleteMultipartUploadRequest;

import com.amazonaws.services.s3.model.CompleteMultipartUploadResult;

import com.amazonaws.services.s3.model.InitiateMultipartUploadRequest;

import com.amazonaws.services.s3.model.InitiateMultipartUploadResult;

import com.amazonaws.services.s3.model.ListMultipartUploadsRequest;

import com.amazonaws.services.s3.model.ListObjectsRequest;

import com.amazonaws.services.s3.model.MultipartUpload;

import com.amazonaws.services.s3.model.MultipartUploadListing;

import com.amazonaws.services.s3.model.ObjectListing;

import com.amazonaws.services.s3.model.ObjectMetadata;

import com.amazonaws.services.s3.model.PartETag;

import com.amazonaws.services.s3.model.PutObjectRequest;

import com.amazonaws.services.s3.model.S3Object;

import com.amazonaws.services.s3.model.S3ObjectInputStream;

import com.amazonaws.services.s3.model.S3ObjectSummary;

import com.amazonaws.services.s3.model.UploadPartRequest;

import com.amazonaws.services.s3.model.UploadPartResult;

public class BucketService {

final String endPoint = "s3.amazonaws.com";

final String regionName = "us-east-1";

final String accessKey = "----------------------";

final String secretKey = "--------------------------";

// S3 client

final AmazonS3 s3 = AmazonS3ClientBuilder.standard()

.withEndpointConfiguration(new AwsClientBuilder.EndpointConfiguration(endPoint, regionName))

.withCredentials(new AWSStaticCredentialsProvider(new BasicAWSCredentials(accessKey, secretKey)))

.build();

final String bucketName = "09myawsbucket";

void createBucket() {

try {

// create bucket if the bucket name does not exist

if (s3.doesBucketExistV2(bucketName)) {

System.out.format("Bucket %s already exists.\n", bucketName);

} else {

s3.createBucket(bucketName);

System.out.format("Bucket %s has been created.\n", bucketName);

}

} catch (AmazonS3Exception e) {

e.printStackTrace();

} catch(SdkClientException e) {

e.printStackTrace();

}

}

void readBucket() {

try {

List<Bucket> buckets = s3.listBuckets();

System.out.println("Bucket List: ");

for (Bucket bucket : buckets) {

System.out.println(" name=" + bucket.getName() + ", creation_date=" + bucket.getCreationDate() + ", owner=" + bucket.getOwner().getId());

}

} catch (AmazonS3Exception e) {

e.printStackTrace();

} catch(SdkClientException e) {

e.printStackTrace();

}

}

void deleteBucket() {

try {

// delete bucket if the bucket exists

if (s3.doesBucketExistV2(bucketName)) {

// delete all objects

ObjectListing objectListing = s3.listObjects(bucketName);

while (true) {

for (Iterator<?> iterator = objectListing.getObjectSummaries().iterator(); iterator.hasNext();) {

S3ObjectSummary summary = (S3ObjectSummary)iterator.next();

s3.deleteObject(bucketName, summary.getKey());

}

if (objectListing.isTruncated()) {

objectListing = s3.listNextBatchOfObjects(objectListing);

} else {

break;

}

}

// abort incomplete multipart uploads

MultipartUploadListing multipartUploadListing = s3.listMultipartUploads(new ListMultipartUploadsRequest(bucketName));

while (true) {

for (Iterator<?> iterator = multipartUploadListing.getMultipartUploads().iterator(); iterator.hasNext();) {

MultipartUpload multipartUpload = (MultipartUpload)iterator.next();

s3.abortMultipartUpload(new AbortMultipartUploadRequest(bucketName, multipartUpload.getKey(), multipartUpload.getUploadId()));

}

if (multipartUploadListing.isTruncated()) {

ListMultipartUploadsRequest listMultipartUploadsRequest = new ListMultipartUploadsRequest(bucketName);

listMultipartUploadsRequest.withUploadIdMarker(multipartUploadListing.getNextUploadIdMarker());

multipartUploadListing = s3.listMultipartUploads(listMultipartUploadsRequest);

} else {

break;

}

}

s3.deleteBucket(bucketName);

System.out.format("Bucket %s has been deleted.\n", bucketName);

} else {

System.out.format("Bucket %s does not exist.\n", bucketName);

}

} catch (AmazonS3Exception e) {

e.printStackTrace();

} catch(SdkClientException e) {

e.printStackTrace();

}

}

void fileUpload() {

// create folder

String folderName = "sample-folder/";

ObjectMetadata objectMetadata = new ObjectMetadata();

objectMetadata.setContentLength(0L);

objectMetadata.setContentType("application/x-directory");

PutObjectRequest putObjectRequest = new PutObjectRequest(bucketName, folderName, new ByteArrayInputStream(new byte[0]), objectMetadata);

try {

s3.putObject(putObjectRequest);

System.out.format("Folder %s has been created.\n", folderName);

} catch (AmazonS3Exception e) {

e.printStackTrace();

} catch(SdkClientException e) {

e.printStackTrace();

}

// upload local file

String objectName = "sample-object";

String filePath = "/tmp/sample.txt";

try {

s3.putObject(bucketName, objectName, new File(filePath));

System.out.format("Object %s has been created.\n", objectName);

} catch (AmazonS3Exception e) {

e.printStackTrace();

} catch(SdkClientException e) {

e.printStackTrace();

}

}

void readList() {

// list all in the bucket

try {

ListObjectsRequest listObjectsRequest = new ListObjectsRequest()

.withBucketName(bucketName)

.withMaxKeys(300);

ObjectListing objectListing = s3.listObjects(listObjectsRequest);

System.out.println("Object List:");

while (true) {

for (S3ObjectSummary objectSummary : objectListing.getObjectSummaries()) {

System.out.println(" name=" + objectSummary.getKey() + ", size=" + objectSummary.getSize() + ", owner=" + objectSummary.getOwner().getId());

}

if (objectListing.isTruncated()) {

objectListing = s3.listNextBatchOfObjects(objectListing);

} else {

break;

}

}

} catch (AmazonS3Exception e) {

System.err.println(e.getErrorMessage());

System.exit(1);

}

// top level folders and files in the bucket

try {

ListObjectsRequest listObjectsRequest = new ListObjectsRequest()

.withBucketName(bucketName)

.withDelimiter("/")

.withMaxKeys(300);

ObjectListing objectListing = s3.listObjects(listObjectsRequest);

System.out.println("Folder List:");

for (String commonPrefixes : objectListing.getCommonPrefixes()) {

System.out.println(" name=" + commonPrefixes);

}

System.out.println("File List:");

for (S3ObjectSummary objectSummary : objectListing.getObjectSummaries()) {

System.out.println(" name=" + objectSummary.getKey() + ", size=" + objectSummary.getSize() + ", owner=" + objectSummary.getOwner().getId());

}

} catch (AmazonS3Exception e) {

e.printStackTrace();

} catch(SdkClientException e) {

e.printStackTrace();

}

}

void fileDownload() throws IOException {

String objectName = "sample-object.txt";

String downloadFilePath = "/tmp/sample-object.txt";

// download object; try-with-resources closes the streams even if a read or write fails

try (S3Object s3Object = s3.getObject(bucketName, objectName);

S3ObjectInputStream s3ObjectInputStream = s3Object.getObjectContent();

OutputStream outputStream = new BufferedOutputStream(new FileOutputStream(downloadFilePath))) {

byte[] bytesArray = new byte[4096];

int bytesRead = -1;

while ((bytesRead = s3ObjectInputStream.read(bytesArray)) != -1) {

outputStream.write(bytesArray, 0, bytesRead);

}

System.out.format("Object %s has been downloaded.\n", objectName);

} catch (AmazonS3Exception e) {

e.printStackTrace();

} catch(SdkClientException e) {

e.printStackTrace();

}

}

void multipartUpload() {

String objectName = "sample-large-object";

File file = new File("/tmp/sample.file");

long contentLength = file.length();

long partSize = 10 * 1024 * 1024;

try {

// initialize and get upload ID

InitiateMultipartUploadResult initiateMultipartUploadResult = s3.initiateMultipartUpload(new InitiateMultipartUploadRequest(bucketName, objectName));

String uploadId = initiateMultipartUploadResult.getUploadId();

// upload parts

List<PartETag> partETagList = new ArrayList<PartETag>();

long fileOffset = 0;

for (int i = 1; fileOffset < contentLength; i++) {

partSize = Math.min(partSize, (contentLength - fileOffset));

UploadPartRequest uploadPartRequest = new UploadPartRequest()

.withBucketName(bucketName)

.withKey(objectName)

.withUploadId(uploadId)

.withPartNumber(i)

.withFile(file)

.withFileOffset(fileOffset)

.withPartSize(partSize);

UploadPartResult uploadPartResult = s3.uploadPart(uploadPartRequest);

partETagList.add(uploadPartResult.getPartETag());

fileOffset += partSize;

}

// abort

// s3.abortMultipartUpload(new AbortMultipartUploadRequest(bucketName, objectName, uploadId));

// complete

CompleteMultipartUploadResult completeMultipartUploadResult = s3.completeMultipartUpload(new CompleteMultipartUploadRequest(bucketName, objectName, uploadId, partETagList));

} catch (AmazonS3Exception e) {

e.printStackTrace();

} catch(SdkClientException e) {

e.printStackTrace();

}

}

}

🍎 Step09AwsS3Application

package edu.fisa.lab;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class Step09AwsS3Application {

public static void main(String[] args) {

SpringApplication.run(Step09AwsS3Application.class, args);

BucketService b = new BucketService();

b.readBucket();

}

}

This is the result of using S3 from the Java project.

The information for the actual AWS S3 buckets is displayed.
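The `multipartUpload` method in BucketService splits a file into parts with a running offset and a `Math.min` on the remaining length. The sketch below isolates just that arithmetic without calling S3, so the part boundaries can be checked before uploading. The `MultipartPlan` and `Part` names are hypothetical, and the 25 MiB file size is a made-up example.

```java
import java.util.ArrayList;
import java.util.List;

final class MultipartPlan {
    static final class Part {
        final int number;
        final long offset;
        final long size;

        Part(int number, long offset, long size) {
            this.number = number;
            this.offset = offset;
            this.size = size;
        }

        @Override
        public String toString() {
            return "part " + number + ": offset=" + offset + ", size=" + size;
        }
    }

    // Same loop shape as multipartUpload: part numbers start at 1 and the
    // last part is whatever remains of the file.
    static List<Part> plan(long contentLength, long partSize) {
        if (partSize <= 0) {
            throw new IllegalArgumentException("partSize must be positive");
        }
        List<Part> parts = new ArrayList<>();
        long offset = 0;
        for (int number = 1; offset < contentLength; number++) {
            long size = Math.min(partSize, contentLength - offset);
            parts.add(new Part(number, offset, size));
            offset += size;
        }
        return parts;
    }

    public static void main(String[] args) {
        long tenMiB = 10L * 1024 * 1024;
        long fileLength = 25L * 1024 * 1024; // hypothetical 25 MiB file
        for (Part part : plan(fileLength, tenMiB)) {
            System.out.println(part);
        }
    }
}
```

For the 25 MiB example this yields three parts of 10 MiB, 10 MiB, and 5 MiB, which correspond to the `withPartNumber`, `withFileOffset`, and `withPartSize` values passed to each `UploadPartRequest`.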
